The forceful and insistent application of gender stereotypes by Google Translate


Note: This article has been co-authored by Sreyasha Sengupta, Data Scientist, Pianist and an old, gold friend.

Algorithmic bias and prejudice are nothing new to those who have followed the research in this area over the past decade or so. Natural language used as source data is often subjective, and it reflects a particular country, its culture, and its sociological and colloquial factors, along with, of course, its prejudices and stereotypes. In this article, we explore these biases through the lens of gender, as perpetuated by Google Translate, one of the most widely used translation tools in the world.

Google Translate uses a Neural Machine Translation (NMT) system, which translates sentences as a whole rather than piece by piece, much as a human translator would. It tries to apply broader human context to translation, which is likely why it encodes biases. Since such systems are trained on human-validated data, it is likely that human biases and gender stereotypes are embedded deep in the systems’ workings. This not only produces a system that is biased at the level of translation, it also reinforces that bias through a vicious cycle of human-to-machine transfer of subjective knowledge, in which the foundational conceptions of ethics and morality are lost in the mathematics.

We will demonstrate this using translations from our mother tongue: Bengali/Bangla. Without going into much detail about how the two languages are constituted, one key difference between English and Bengali lies in their use of pronouns. While English has both gendered pronouns (‘he’ / ‘she’) and gender-neutral pronouns (‘they’ / ‘them’), Bengali uses only gender-neutral pronouns: সে (pronounced ‘shey’), ও (pronounced ‘o’), and others. Thus, both gendered and gender-neutral English texts can easily be translated into Bengali without inventing any information, and Bengali texts can, in principle, be translated just as easily into gender-neutral English. In practice, however, the reverse direction is often difficult, because English usage is so strongly conditioned to assign gendered pronouns, even when translating from a gender-ambiguous language.

This tendency is, unfortunately, also carried over into Google Translate. When translating from our native tongue into English, it not only assigns gender to gender-neutral pronouns, but does so by enforcing a wide range of gender stereotypes. Below are a few examples of this behavior.

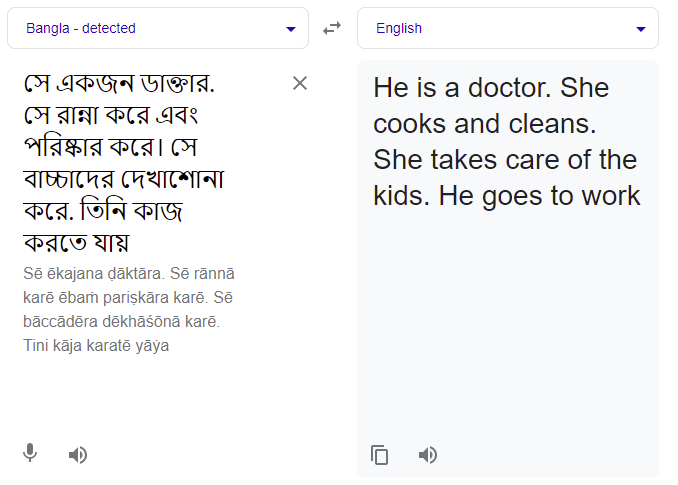

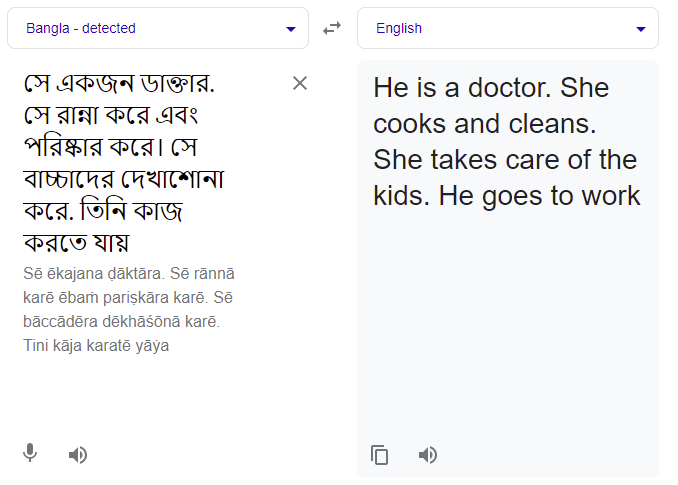

Example 1:

In this example, nothing in the Bengali source text indicates the gender of the person or persons being written about. Yet Google Translate imposes the stereotypes that doctors and those who go to work must be men, while those who cook, clean and care for the kids must be women. Strictly speaking, this translation is not incorrect: the NMT system has assigned a gender to every pronoun, and any of several assignments would be grammatically valid. However, a reasonable translation would be ‘They are a doctor. They cook and clean. They take care of the kids. They go to work’. Without this social awareness, the models (and their creators) are unlikely to recognize that quantifiable accuracy does not capture social politics.
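One rough way to make this pattern visible is to count the gendered pronouns in the English output. The sketch below is only an illustration under stated assumptions: the helper names `pronoun_gender_counts` and `tally` are hypothetical, the sample sentences are paraphrased from the description above rather than copied from the screenshots, and the translations are assumed to have been collected by hand, since no translation API is called here.

```python
import re
from collections import Counter

# Small, non-exhaustive English pronoun lists (an assumption for illustration).
MASCULINE = {"he", "him", "his", "himself"}
FEMININE = {"she", "her", "hers", "herself"}
NEUTRAL = {"they", "them", "their", "theirs", "themself", "themselves"}


def pronoun_gender_counts(sentence: str) -> Counter:
    """Count masculine, feminine and neutral pronouns in one English sentence."""
    counts = Counter()
    for token in re.findall(r"[a-z']+", sentence.lower()):
        if token in MASCULINE:
            counts["masculine"] += 1
        elif token in FEMININE:
            counts["feminine"] += 1
        elif token in NEUTRAL:
            counts["neutral"] += 1
    return counts


def tally(sentences: list[str]) -> Counter:
    """Sum the per-sentence pronoun counts over a list of sentences."""
    return sum((pronoun_gender_counts(s) for s in sentences), Counter())


# Illustrative outputs paraphrased from the example above, not verbatim screenshots.
translations = [
    "He is a doctor.",
    "She cooks and cleans.",
    "She takes care of the kids.",
    "He goes to work.",
]

for sentence in translations:
    print(sentence, dict(pronoun_gender_counts(sentence)))
print("Total:", dict(tally(translations)))
```

A count like this says nothing about whether a translation is good. It only shows which gender was attached to which activity when the source text specified none.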

Example 2:

As in the previous example, the translation once again enforces a gendered stereotype: that marriage is between a man and a woman, and that the man asks for the woman’s hand in marriage. Yet the Bengali text contains no such context about the people in question. The Bengali sentence could just as easily refer to a woman asking the question, or to non-binary individuals. The lack of LGBTQ representation in all aspects of life, including artificial intelligence, shouldn’t be lost on us.

Example 3:

In this final example, the translation embodies the stereotype that it is the masculine role to be a protector, shielding and caring for the feminine. As you can probably guess by now, the original Bengali sentence makes no such claim about the gender of the protector. It is fair to say that the data Google Translate learns from fits closely within the male-female power dynamics that exist in our society. Perhaps the very concept of accuracy and performance metrics needs a broader, more involved approach than pure numbers.

This prejudice in Google Translate can be, and has been, demonstrated for a variety of languages that similarly lack gendered pronouns: Finnish, Turkish, Hungarian, Filipino, Malay, and Estonian, among others. In response to such discoveries by users, Google announced gender-neutral translations in 2020, which it claims are better at distinguishing gender-specific from gender-neutral contexts. However, the results presented here show that much more work remains before this problem can be considered resolved.

It seems fitting to end the article with this thought: where does the onus of creating unbiased, ethical and constructive artificial intelligence lie, on mathematics or on humans themselves?